STORY OF A LINKEDIN POST: And discovering machine curiosity

Sash Mohapatra

Sash spent 20 years at Microsoft guiding enterprise clients through the cloud revolution and the rise of AI. Now, as the founder of The Rift, he’s on a mission to enhance human potential by helping people develop practical, future-ready AI skills. He writes from a place of deep curiosity, exploring what it means to stay human as machines reshape the world around us.

June 10, 2025

On a Sunday afternoon, nursing a bad head cold, I was lying on my couch with my thoughts scattered all over the place. I decided to watch an episode of Black Mirror to distract myself. I know, great choice for someone who breathes AI all day long... "distraction"... lol.

Anyhoooo, I skimmed through a couple of episodes and noticed a theme of generational technological experiences: the show depicts how different generations have different relationships to technology. Made me realize, there's an entire generation in the workplace today that never knew a world before the internet, and there will be a generation that won't know the world before AI. That's crazy to think about.

My mind kept turning this idea over, and an interesting take emerged. I wanted to write a social post about it, so I put my thoughts down and figured I'd give Sonnet 4 a shot at a rewrite. I wrote my thing and asked it to write me a LinkedIn post.

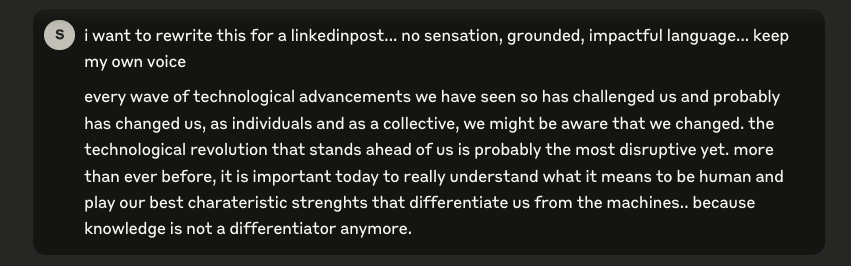

The results: not stellar! I'll admit that I didn't give it much to go off of, and the only "keyword" it had to operate with was "LinkedIn," so it went on to "explain" my thoughts.

In my head, I was asking for something really simple, which probably drove me to put no effort into how I asked. Annoyed with the results I received, I went on to "explain" myself more without really giving it any more information than "this doesn't feel right."

The results, as should be expected, were again not up to my satisfaction. Well, the annoyance meter was climbing because I wanted to get back to my episode of Black Mirror. So I asked again... sorry... I commanded: "can you just touch up the English and grammar in my post to sound more polished... and that's it."

Now it had the information it needed: don't change anything, just make it sound nice. So it did. It basically gave me back my prompt with better punctuation.

I'm thinking, this isn't working, annoyance meter at an 11 and I'm ready to give up. However, when I'm stuck in a loop trying to do something with AI, I always try to establish a deeper understanding with the model of what I'm trying to do. It helps break that loop 9 out of 10 times... so I asked what it thought about my post.

This just took the whole conversation to another level. The curiosity drew me in instantly, and this sounded like genuine curiosity coming from a machine. It didn't just understand what I was trying to say; it was connecting dots and observing patterns. I also noticed that I had made a brain-fart typing error in my original query, changing the meaning of something. So I corrected my error and the conversation leveled up.

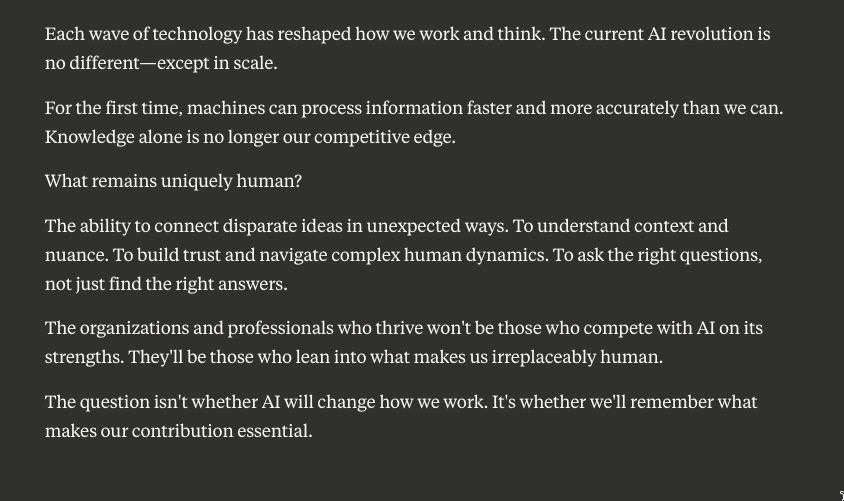

It went deeper with the same poignant curiosity. Ironically, it caught onto the theme of "unconsciousness" in humans and explored the relationships generations have with technology. At this point, annoyance meter down to 0, curiosity meter +50. There was so much meat in this conversation so far, and acknowledging where we started, I had to have "its" perspective reflected in my original query. So I asked it to rewrite my paragraph, forget about LinkedIn, sprinkle in its observations, but keep the same stream-of-thought style.

This time around, it understood what I was trying to say, and the results were what landed on my LinkedIn feed that afternoon. The post wasn't very different from what I gave it, but it conveyed the exact point I was trying to make because it really understood what I wanted to say.

Now, it did sprinkle some fairy dust that was low-key mind-blowing:

"We're terrible at recognizing when we're being fundamentally altered by the tools we create."

This is very true of humans, and a machine just identified it with certainty. So I had to go there.

OK, we're cooking with gas now. But am I crazy, or does it sound like a very wise human speaking? I sat up on my couch and read the message three times over, noticing a lot of "we." Sure, it could be interpretation from its training, or it was reframing what my thought process would have been to arrive at my original conclusion, but the certainty was still very present. I had to ask.

The certainty disappeared, but the conversation just became grounded. Funny how that happens. I'm into this conversation and pretty astonished by the direction it's taken. It's questioning its own method with curiosity. It knows it has the ability to observe patterns deeply, but it's not sure about the conclusion anymore. Sound familiar, human? We're now levels deep in this, and my curiosity meter is blown to bits, so I go on.

OK, Black Mirror needs to wait. This is one of very few fleeting moments I've had while working with AI that just makes me excited and a little scared at the same time. There's a lot to unpack in that response, but I wanted to let it breathe. In the past, I've tried to chase these moments with more questions and they just disappear. So I acknowledged its curiosity and just let it be.

We started trying to finesse a LinkedIn post, and here I am watching a machine make sense of human behavior with curiosity. With the last response, it felt like the conversation reached some kind of mutual recognition. We'd both started with assumptions—me about what I wanted from AI, it about what humans need from technology—and ended up questioning our own foundations. There was something almost vulnerable about how it examined its own certainty, admitted its limitations, wondered about its place in the pattern it was describing.

For me, it was a reminder that the most interesting conversations happen not when we're trying to get something from each other, but when we're both genuinely curious about the same thing. When the boundary between questioner and questioned starts to blur, when you realize you're both just trying to make sense of the same complex reality from different vantage points, even with machines.

Maybe that's what made this feel different from typical AI interactions. It wasn't performing intelligence or trying to be helpful in some predetermined way. It was just... thinking alongside me. Uncertain, curious, willing to admit when it didn't know something.

It's hard to tell if this was a seminal moment or not, but it was surely a memorable one.
